In the coming years, we will pass from the digital era to the quantum era. This article presents the basic elements of quantum computing, one of the technologies with the most disruptive potential.

The digital transformation is changing the world faster than ever. But would you believe that the digital age will soon give way to the quantum era? Digital literacy has been identified as an area where open knowledge and accessible opportunities to learn about technology are urgently needed to address gaps in social and economic development. Learning the key concepts that underlie the digital age will become even more critical as we face the imminent arrival of another technological wave capable of transforming existing models with remarkable speed and power: quantum technologies.

In this article, we compare the basic concepts of traditional computing and quantum computing, and we begin to explore how quantum computing applies to other related areas.

What are quantum technologies?

Throughout history, humans have developed technology as they have come to understand the workings of nature through science. Between 1900 and 1930, the study of certain physical phenomena that were not yet well understood gave rise to a new theory in the field of physics, Quantum Mechanics. This theory describes and explains how things work in the microscopic world, the scale where we find molecules, atoms, and electrons. Thanks to this theory, it has been possible to explain these phenomena and to understand that subatomic reality works against our intuition in an almost magical way.

These quantum properties include quantum superposition, quantum entanglement and quantum teleportation.

- Quantum superposition describes how a particle can be in different states at the same time.

- Quantum entanglement describes the way in which two separate particles can be correlated so that measuring one of them immediately tells us about the state of the other, however far apart they are.

- Quantum teleportation uses quantum entanglement, together with an ordinary (classical) message, to transfer a quantum state from one place to another without the particle that carries the state having to travel between them.

Quantum technologies are those which are based on these quantum properties of subatomic nature.
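To make superposition and entanglement a little more concrete, here is a minimal sketch in Python. It is a toy model, not how a real quantum device is programmed: the two-particle state (usually called a Bell pair), the `measure` helper and the fixed random seed are all illustrative assumptions that do not come from the article.

```python
import math
import random

# State of two qubits, written as one amplitude per possible outcome.
# This "Bell pair" is an illustrative choice: equal amplitude on "00"
# and "11", zero amplitude on "01" and "10".
bell_pair = {
    "00": 1 / math.sqrt(2),
    "01": 0.0,
    "10": 0.0,
    "11": 1 / math.sqrt(2),
}

def measure(state, rng):
    """Sample one measurement outcome; probability = |amplitude| squared."""
    outcomes = list(state)
    weights = [abs(amplitude) ** 2 for amplitude in state.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure(bell_pair, rng) for _ in range(1000)]

# Each qubit on its own looks random (superposition), yet the two
# results always agree with each other (entanglement).
print(sorted(set(samples)))
print(samples.count("00"), samples.count("11"))
```

Only the outcomes "00" and "11" can appear, in roughly equal numbers: neither particle has a definite value before measurement, but the two values are always found to match.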

Today, the understanding of the microscopic world through Quantum Mechanics allows us to invent and design technologies capable of improving people's everyday lives. There are many different technologies that use quantum phenomena, and some of them, such as lasers and magnetic resonance imaging (MRI), have been around for more than half a century. However, we are currently witnessing a technological revolution in quantum areas such as quantum computing, quantum information, quantum simulation, quantum optics, quantum metrology, quantum clocks and quantum sensors.

What is quantum computing? The first step is to understand classical computing

To understand how quantum computers work, it helps to first explain the underlying logic of the computers we currently use on a daily basis, which we will refer to in this document as digital or classical computers. Like other electronic devices such as tablets or mobile phones, they use bits as their fundamental units of memory. This means that programs and applications are encoded in bits, that is, in the binary language of zeros and ones. Every time we interact with any of these devices, for example by pressing a key on the keyboard, strings of zeros and ones are created, destroyed, and/or modified within the computer.

The interesting question is, what are these zeros and ones physically inside the computer? The zeros and ones of the bits correspond to electrical currents that circulate, or not, through microscopic parts called transistors, which act as switches. When no current flows, the transistor is “off” and corresponds to a bit 0; when current flows, the transistor is “on” and corresponds to a bit 1.

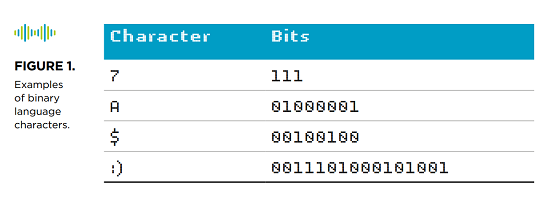

Another way to understand how bits work is to think of them as holes or gaps, so that an empty gap is a bit 0 and a filled gap is a bit 1. What fills these holes are, in fact, electrons, which is why computers are called electronic devices. As an example, Figure 1 shows some characters written in binary language.
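The idea of characters written as zeros and ones can be tried out directly. The short Python sketch below assumes the common 8-bit ASCII encoding; the helper names `to_bits` and `from_bits` are ours, chosen for illustration.

```python
def to_bits(text):
    """Return the 8-bit binary pattern of each character in an ASCII string."""
    return [format(byte, "08b") for byte in text.encode("ascii")]

def from_bits(bit_strings):
    """Rebuild the text from its 8-bit patterns."""
    return bytes(int(bits, 2) for bits in bit_strings).decode("ascii")

encoded = to_bits("Hi")
print(encoded)             # ['01001000', '01101001']
print(from_bits(encoded))  # Hi
```

Each character becomes a string of eight bits, and the same eight bits can be turned back into the character, which is essentially what happens when a key is pressed and the letter later appears on screen.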

Now that we have an idea of how classical computers work, let’s try to understand how quantum computing works.

From bits to qubits

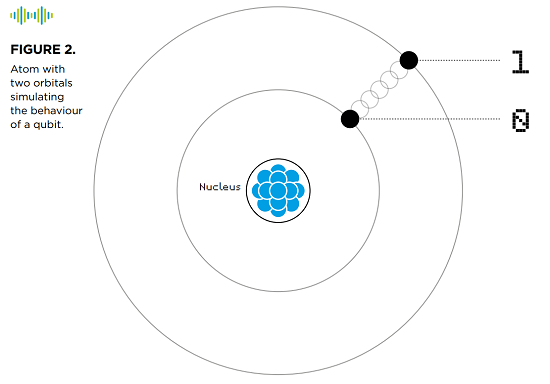

Similar to bits in classical computing, the basic unit of information in quantum computing is the quantum bit, or qubit. Qubits are defined as two-level quantum systems. Like classical bits, they can be at the lower level, a state of low excitation or energy defined as 0, or at the higher level, a state of higher excitation or energy defined as 1. The fundamental difference from classical computing is that qubits can also be in any of the infinitely many intermediate states between 0 and 1, so they are no longer binary. For example, a qubit can be in a state that is half 0 and half 1, or three-quarters 0 and one-quarter 1, meaning that a measurement would give 0 or 1 with those probabilities. This ability to be in intermediate states is the property known as quantum superposition. From a computational perspective, it allows us to make some specific calculations exponentially faster.
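As a rough illustration of these intermediate states, a qubit can be written as a pair of numbers called amplitudes, whose squares give the chances of reading 0 or 1. The sketch below is a simplification under stated assumptions: it uses only real, non-negative amplitudes (real qubits also carry a phase), and the function names `qubit` and `probabilities` are hypothetical helpers, not part of any library.

```python
import math

def qubit(p_zero):
    """Return amplitudes (a0, a1) for a qubit read as 0 with probability p_zero.

    Simplification: real, non-negative amplitudes only. Real qubits also
    carry a relative phase, which this sketch ignores.
    """
    if not 0.0 <= p_zero <= 1.0:
        raise ValueError("p_zero must be between 0 and 1")
    return math.sqrt(p_zero), math.sqrt(1.0 - p_zero)

def probabilities(state):
    """Square each amplitude to get the chance of measuring 0 and 1."""
    a0, a1 = state
    return a0 ** 2, a1 ** 2

half_half = qubit(0.5)             # "half 0 and half 1"
three_quarters_zero = qubit(0.75)  # "three-quarters 0 and one-quarter 1"

for state in (half_half, three_quarters_zero):
    p0, p1 = probabilities(state)
    print(f"amplitudes={state}  P(0)={p0:.2f}  P(1)={p1:.2f}")
```

Whatever the mix, the two probabilities always add up to 1, and a classical bit is simply the special case where one of them is exactly 1.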

Quantum algorithms mean exponentially more powerful and efficient computation

The purpose of quantum computers is to take advantage of the quantum properties of qubits, as quantum systems, to run quantum algorithms that use superposition and entanglement to offer a much greater processing capacity than classical computers. It is important to stress that the true paradigm shift does not consist of doing the same thing as current digital or classical computers, only faster, as many articles erroneously state. Rather, quantum algorithms allow certain operations to be completed in a totally different way, which in many cases turns out to be exponentially faster because it exponentially reduces the time or computational resources needed.

Let’s take a look at a concrete example of what this implies. Imagine we are in Bogotá and we want to know the best route to Lima among a million different options for getting there (N=1,000,000). To use computers to find the optimal path, we need to digitize the 1,000,000 options, which means translating them into bits for the classical computer and into qubits for the quantum computer. While a classical computer would need to analyze the paths one by one to find the desired one, a quantum computer takes advantage of a process known as quantum parallelism, which allows it to consider all the paths at once. This implies that, while the classical computer needs on the order of N/2 steps or iterations on average, in other words 500,000 attempts, the quantum computer will find the optimal path after only about √N operations on the register, that is, 1,000 attempts.
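The quadratic speedup in this example matches what is usually called Grover's search algorithm. The article does not name it, so that identification is our assumption, and so is the idea that the best route can be recognized by a yes/no check. The Python sketch below simulates the algorithm on an ordinary computer by tracking one amplitude per option. It uses N = 1,024 instead of 1,000,000 to keep the run short, and it uses the standard iteration count of roughly (π/4)·√N, which is slightly below the article's rounded √N.

```python
import math

def grover_search(n_items, marked):
    """Simulate Grover's algorithm with a plain list of amplitudes.

    Returns (iterations, probability of measuring the marked index).
    """
    # Start in an equal superposition over all candidates.
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked candidate's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return iterations, amplitudes[marked] ** 2

n_items = 1024  # small stand-in for the article's N = 1,000,000
iterations, p_success = grover_search(n_items, marked=321)

print("quantum iterations:", iterations)
print("chance of reading the right answer:", p_success)
print("classical checks on average:", n_items // 2)
```

Note that the simulation itself still touches every amplitude on each iteration, so it is not faster than a classical search. It only shows how few rounds a quantum computer would need before the right answer dominates the measurement.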

In the previous case, the advantage is quadratic, but in other cases it is even exponential: a register of n qubits can be in a superposition of 2^n basis states, whereas n classical bits hold only one of those 2^n strings at a time. To illustrate this, it is common to say that with 270 qubits a quantum computer would have more basis states (2^270 different strings of characters, held simultaneously) than there are atoms in the universe, a number estimated at around 10^80. Another estimate is that a quantum computer with between 2,000 and 2,500 qubits could break virtually all the public key cryptography used today.
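The growth in the number of basis states is easy to check with exact integer arithmetic. In the sketch below, the figure of 10^80 atoms is the common order-of-magnitude estimate and is our assumption; the function name `basis_states` is likewise only illustrative.

```python
def basis_states(n_qubits):
    """Number of distinct basis states (bit strings) of n qubits: 2 to the power n."""
    return 2 ** n_qubits

# Common order-of-magnitude estimate for atoms in the observable
# universe (an assumption, not an exact count).
ATOMS_IN_UNIVERSE = 10 ** 80

for n in (1, 2, 10, 270):
    print(n, "qubits ->", basis_states(n), "basis states")

print(basis_states(270) > ATOMS_IN_UNIVERSE)  # True
```

Every additional qubit doubles the count, which is why a few hundred qubits already exceed any number of physical objects we could list.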

Why is it important to know about quantum technology?

We are at a moment of digital transformation in which different emerging technologies such as blockchain, artificial intelligence, drones, the Internet of things, virtual reality, 5G, 3D printers, robots and autonomous vehicles are increasingly present in multiple areas and sectors. These technologies, called upon to improve the quality of life of human beings by accelerating development and generating social impact, are advancing in parallel today. Only rarely do we see companies developing products that exploit combinations of two or more of these technologies, such as blockchain and IoT or drones and artificial intelligence. Although they are destined to converge, generating an exponentially greater impact, the initial stage of development in which they find themselves and the shortage of developers and people with technical profiles make their convergence still an incomplete task.

Quantum technologies, due to their disruptive potential, are expected not only to converge with all of these new technologies, but to have a transversal influence on practically all of them. Quantum computing will threaten existing systems of authentication and the exchange and secure storage of data, with a greater impact on those technologies in which cryptography plays a more relevant role, such as cybersecurity and blockchain. Quantum computing will have a smaller negative impact on technologies such as 5G, IoT or drones, but one that is still worth considering.

Abierto al Público will feature concrete examples of how the application of quantum technologies is disrupting various sectors and how open knowledge can support the preparation for this disruption. Meanwhile, we invite you to learn in greater depth about this topic by downloading the publication Quantum Technologies: Digital transformation, social impact, and cross-sector disruption.

Dozens of quantum computer simulators are available online, written in existing programming languages including C, C++, Java, MATLAB, Maxima, Python and Octave. There are also new languages such as Q#, launched by Microsoft. You can explore and play with a virtual quantum computer through platforms such as those from IBM and Rigetti.
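To give a feel for what such simulators do internally, here is a minimal single-qubit sketch in plain Python. It does not use or imitate the interface of any of the platforms mentioned above; the `apply_gate` helper is hypothetical, and the Hadamard gate is a standard textbook operation chosen only as an example.

```python
import math

# Hadamard gate: turns a definite 0 or 1 into an equal superposition.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

zero = [1.0, 0.0]            # qubit prepared in state 0
once = apply_gate(H, zero)   # equal superposition of 0 and 1
twice = apply_gate(H, once)  # interference brings it back to state 0

print([round(a ** 2, 3) for a in once])   # chances of reading 0 and 1
print([round(a ** 2, 3) for a in twice])
```

Applying the gate once gives a 50/50 chance of reading 0 or 1, while applying it twice returns the qubit to 0 with certainty. Real simulators do the same kind of matrix arithmetic, only over many qubits at once.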
