At the IDB we are motivated to learn how open source tools for text analytics and other technology can help guide the task of finding relevant knowledge. With that in mind, we teamed up with the Institute for the Future to create SmartReader, a tool to share with others interested in open source collaboration and artificial intelligence.

If you have ever worked on a literature review, we’re sure you know the feeling: you are hunkered down, buried in a pile of journals, books, and open browser windows, trying to make sense of it all and keep track of the winding threads of the topic you’re researching. You’re on the fifth document, which the author didn’t bother to write in a very engaging way. But you can’t skip it; after all, what if the missing insight you’ve been searching for is hidden in its depths? So, you read a page, but it doesn’t stick, so you read the same page again. Finally, you read it a third time and you turn the page. Your mind is wandering. Should you go back or just read something else? What if you miss something important?

The SmartReader could be your answer. It’s an experiment in using natural language processing techniques to make the literature review process more efficient, keep your research on track, and help point out key arguments you might have otherwise missed in the reading. The prototype version of the text analytics tool and its code in Python are now open and available to the public as part of the IDB’s Code for Development initiative.

What does the SmartReader do?

The SmartReader takes a body of text documents you have collected to support a specific research question and in minutes, generates insights for your literature review.

The results include:

Keywords

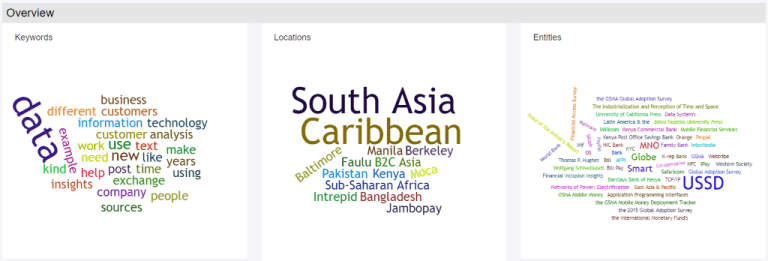

Word maps of the most relevant words, entities, and locations at the level of the overall topic, as well as at the level of each subtopic that you specify.

Relevant Content

A list of sentences highly relevant to a specific subtopic, as well as unique. These sentences are also linked to and highlighted in the source text so that you can explore them in context.

Now I am sure you are thinking, “I need this very much!” And you are right! But first, let’s go over the mechanism that makes all this magic happen in more detail.

How do I use the SmartReader?

First, you must formulate a research question such as “How will technology impact the informal economy over the next decade?”

Next, you collect a set of publications (a corpus of documents) that seem relevant to the chosen question, just like you would for a literature review. Then, to set up a framework for analyzing the corpus, you identify a main topic (e.g. “Informal Economy”) and a set of relevant subtopics (e.g. “innovation, productivity, blockchain, and taxation”) and give them to the SmartReader. With these inputs, the SmartReader queries Google to contextualize the subtopics and uses the real-time results to generate a model. Last, it compares the model to our corpus and extracts the most salient terms and entities, while highlighting phrases within the documents containing relevant and unique information. Here’s a more detailed description of the process:

Step 1: Model Definition

This is where you tell SmartReader what topic you’re interested in, and what sub-topics you want to focus on within that topic, in order to define the model. “What is a model?” you ask. Well, in this context, it is a set of keywords built based on the results of a Google search, and weighted by their relevance to our research question.

Step 2: Model Status

The second step is checking the Model Status to see if the SmartReader has finished creating the model based on the topic and subtopics you told it you were interested in exploring. A model’s status is “Queued” immediately after the subtopics are submitted, “Processing” while the Google search and content analysis are underway, and “Done” when the model is created and ready to be used.

Step 3: Model Application

Once the model is created, you can to tell SmartReader to use it to analyze a set of documents. All you have to do is upload a .zip file with the documents in .txt format, and choose from a drop-down list which model to use to analyze the corpus. Now the magic happens!

Step 4: View Results

Lastly, you’re ready to see the Results! Word maps of the most relevant keywords, locations, and entities for each of the subtopics as well as for the overall corpus. Below each of the subtopics, you’ll also see a list of sentences worth checking out, with links back to their location in the source text. Oh, and you can also download the results in .json if that’s your thing.

Now It is YOUR hands! How will you help improve this text analytics tool?

Now It is YOUR hands! How will you help improve this text analytics tool?

We know that the tool can be refined and become a useful time-saver for researchers and curious minds all over, so we have made SmartReader available via Code for Development as an open source tool for text analytics in Python 3.6.5 and we could not be more eager to hear about your experience with it! You’ll find installation instructions, a user guide and other documentation that will help you set it up and experiment. And if you love programming in python and are interested in getting your hands dirty right now, we’ve already compiled a backlog of improvements to work on, such as making the model results visible, incorporating Google Scholar, tweaking the query strings used to create the model, and improving scoring for the model.

Did we hear you say “challenge accepted”?

By Kyle Strand and Daniela Collaguazo from the Knowledge, Innovation, and Communication Sector of the IDB and Seaford Bacchas from the University of the West Indies, Mona

Seaford Bacchas

Seaford Bacchas is a graduate student at the University of the West Indies Mona. He completed a BSc (Hons) in Software Engineering at the same institution and is currently pursuing an MPhil in Computing. His research focuses on Big Data analysis on parallel computing.

Leave a Reply